Ranting About Bloat

While this web site has always been fairly light-weight, especially as far as web sites go recently, there have been a few lingering bits I was not happy with which I've now finally addressed:

- Removal of Google Analytics. Because who cares about that crap, especially for a personal web site.

- Removed use of custom web fonts. I initially added them because they were the old-school IBM PC fonts which I thought fit the theme of my usual posts here... but ... honestly, who cares.

- Removed all JavaScript / `<script>` tags. They were only being used to include and invoke Highlight.js for code snippet syntax highlighting. This has been replaced with server-side rendering equivalents.

If I want to get really nitpicky about things, this web site currently has four separate CSS files that are being included, but they are all quite small and I don't want to go through the effort of running some preprocessor step that minifies and concatenates all the CSS files together to address this "properly." Longer term, I will probably just re-write all the CSS styles into something specifically tailored for what I need for this web site (currently it's a bit of a mish-mash of a CSS theme I found with a bunch of customizations layered on top).
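For the curious, that kind of concatenate-and-minify step does not have to be a big deal. Below is a minimal sketch in Python, not what this site actually uses. The function names (`minify_css`, `bundle_css`) and the file names in the usage note are made up for illustration, and the minifier is deliberately naive: it strips comments and collapses whitespace with regular expressions, and it does not understand string literals or `url(...)` values, so it could mangle unusual stylesheets.

```python
import re
from pathlib import Path


def minify_css(css: str) -> str:
    """Naive CSS minifier (illustrative only, not a real CSS parser)."""
    # Drop /* ... */ comments, including multi-line ones.
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)
    # Collapse every run of whitespace (newlines, tabs, spaces) to one space.
    css = re.sub(r"\s+", " ", css)
    # Remove spaces around braces, semicolons and commas.
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)
    # Remove spaces after a colon ("color: red" -> "color:red").
    css = re.sub(r":\s+", ":", css)
    return css.strip()


def bundle_css(paths: list[Path]) -> str:
    """Minify each file and join them, preserving the given order.

    Order matters because later rules override earlier ones in the cascade.
    """
    return "".join(minify_css(p.read_text(encoding="utf-8")) for p in paths)
```

Usage would be something along the lines of `Path("site.min.css").write_text(bundle_css([Path("theme.css"), Path("custom.css")]))`, with the paths listed in the same order the page currently includes them.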

There have been a number of very good posts over the past couple years written by other more prominent names in the tech-world talking about "web bloat." It's something that I very much agree with, and part of the reason for the above changes was to "do my part" here, heh.

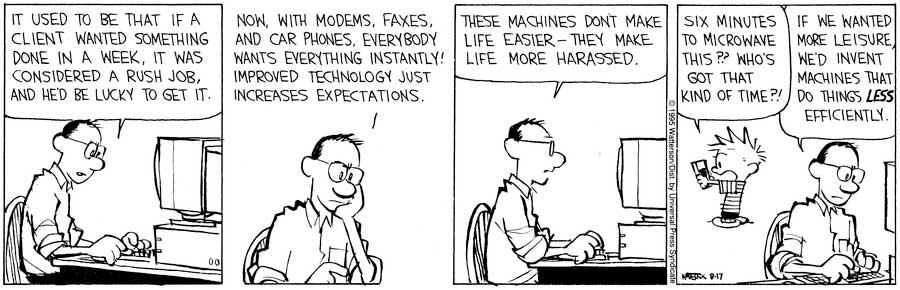

The recent release of new Apple hardware using their own custom ARM CPUs has been exciting news as of late. And the benchmark results of that hardware have been quite impressive. But my inner pessimist, upon seeing these benchmarks, cannot help but think "great, now a bunch of shitty programmers can get away with writing even more sloppy code."

I really do think this has been a problem that just keeps getting worse and worse. Developers don't care that much about writing efficient code, or in the somewhat rare event that they do, they very often are not able to make improving efficiency a priority over other tasks that produce more "visible" results (e.g. adding new features). Because increasingly as a society, we just want more and more, and we want it yesterday.

So, hardware keeps getting faster and faster, but our code almost seems to keep getting slower and slower. Of course, it's not all black-and-white like that, and in fact, there are lots of areas you could point out where code is getting faster and better. I think the problem is actually two-fold: people writing slow/inefficient code, and/or people writing code for slow/inefficient frameworks or runtimes.

There are obvious examples of "overkill" solutions to problems that many could point out. One big example is the pervasive use of Electron to build desktop applications. There are obvious upsides to using Electron (cross-platform out-of-the-box, easier to have a web version as well as a "desktop" version, etc.), but it just seems so ridiculous to me that we're even in this situation where its use is seriously considered by developers. The idea of spinning up a heavy-weight web browser taking up probably a hundred or so megabytes on disk to run individual desktop applications (with each one using its own separate instance of that embedded web browser) is crazy to me.

Part of my day job (or I guess it is my previous job now, since I left it two weeks ago, heh) entailed maintaining internal web services used by other applications in the organization. For this, many developers would probably today reach for something like Postman or Insomnia to do testing of the various endpoints in those web services. Indeed, this was the route I originally took. However, a number of our web services returned large-ish responses, in many cases by necessity just due to the large amount of data that would be needed by applications. It was really surprising to me how soon you would notice lag within both Postman and Insomnia when dealing with a large JSON response. I remember being annoyed when I first ran into this, thinking that the web services I was testing were slow for these requests and then, without thinking, immediately investigating the web service itself to see what was running so slowly. Not finding any problem at all when executing the same types of requests via curl directly, I came to realize that it was actually Postman taking a long time to render the response. I can only assume this was due to the use of Electron. This was somewhat of a "final straw" event for me, as I quickly forked over the cash to purchase a license for Paw, as it is a native application that does basically all the same things that Postman and Insomnia do, but it is quite performant.

Now, not all Electron applications are terrible and horribly slow. While I do not use it myself, I've always heard great things about the performance of VS Code. But I wonder why VS Code seems to be the exception rather than the norm? I don't have an answer for that, obviously. I just have my personal beliefs, as mentioned above, about people writing sloppy code to get it shipped faster.

IntelliJ is another tool that I use very frequently. While it is an amazing IDE for JVM development, it is a pig, no doubt about it. Not even an Electron application! But, just like an Electron application, it takes a while to start up, even on new hardware (I mean, they even have a loading bar during startup ... clearly JetBrains has just accepted that it is going to have a slow startup and you better learn to like it!), and it consumes a bunch of RAM.

This is very much an apples-to-oranges comparison, but there is a very good reason that I am happy to be a paying customer for Sublime Text. No, it's not a full-blown IDE with all the bells and whistles of IntelliJ, but the developers of Sublime Text clearly always have performance in mind, as it starts up within a second or two even on 10-year-old hardware, and its RAM usage is fairly light. And it's a native application of course.

I feel like solid-state storage becoming common-place didn't help things either. Back when we were all using spinning-rust disk drives, you couldn't really go nuts with loading tons of data off disk, at least, not without a very obvious drawback. But now that many (most?) people are using computers with solid-state storage, it provides yet another avenue for developers to be lazy. Now it feels almost like you need to have solid-state storage to get acceptable levels of performance! Quite the change from before where it was a super nice, but not necessary, speed boost.

Honestly, I would love to see more developers using older hardware for their main development machine. I know it'll never happen, but I cannot help but wonder how much would change if developer workstations were all limited to say, 8GB of RAM at the very top-end, and some spinning-rust hard disk? I'd like to think a lot would change as the developers would get sick of waiting for their application to load each time they go to test something out, but maybe that's wishful thinking.

I don't think the move to "the cloud" has really helped either. Sure, it makes distribution simpler versus getting end-users to update a local install of your application. But there is a performance cost to putting the equivalent application functionality in a browser. Some of this work can obviously be run server-side (depending on the specifics of the application of course), but that still leaves the cost of keeping the user interface itself from feeling sluggish.

Another anecdote from a previous application I worked on at a previous job. We had written what was essentially a glorified "CRUD" style web application that had a bunch of highly specialized business rules built-in and was able to interface with several other databases and web services to pull in related data for users filling out a form within the application. I can vividly remember that during development, even on heavy forms, this application felt merely "ok" from a "snappy user interface" perspective. In many areas of a typical form it felt fine and responsive enough, but pretty much anywhere you were dealing with tabular data especially, you would notice some lag or slowness. Now, this was running on a developer workstation. The actual end-users of this application in the organization were usually using lower-spec hardware. In many cases, much lower-spec hardware. So any slow-down I would see from my end would almost certainly be magnified somewhat from the end-users' perspective.

The thing is that I can remember, at multiple points during development of this web application, thinking back to my days fiddling around with Visual Basic 6. I'd think to myself "This application is all just basic data entry. Everything we're doing here would undoubtedly be possible to do with that 20-year-old software, and it would probably run faster than this." Of course, that's not a totally fair comparison. For one, as I've already mentioned, the move to web applications has made distribution to end-users much simpler. But when you get right down to it and look at the application itself, there was no fundamental reason that our web application really needed to be a web application.

The irony to me about this is that at that organization there is a very widely used desktop application that is fairly heavy already and there has been talk for years about converting it to a web application (using much of the same internal code and framework that was built for the other web application that I worked on). The size of data being handled by this other desktop application is significantly larger than the web application I worked on, and so the idea of converting it to be a web application too just seems so completely ridiculous to me given the obvious performance issues I have witnessed with the existing web application. The thing is that I am sure that many of these performance issues could probably be solved in time. But the developers will never be given the time. And the end-users will ultimately suffer. Ugh.

It is really baffling to me why so many web sites today need to serve up literally megabytes worth of JavaScript. Not even web applications, but web sites. Web applications doing that I can understand (within reason). But web sites? You know, where you go to read text usually? Like your local news web site. Or tech blog. Or whatever else along those lines. Sure, images can take up a lot of bandwidth, especially for higher quality images, but why do web sites serving up predominantly text articles need to be developed using a SPA-style architecture requiring hundreds of kilobytes to several megabytes of JavaScript (in the worst, but not totally uncommon, cases) to just show me a text article? I mean, what the fuck?

Nowadays, I find that I am all too willing to go out of my way and pay for software (even if there is a free alternative) that is obviously developed by people who share my ideals and disdain for the "bloat" that has been permeating our industry for a while now.

At least as far as native desktop applications go, I feel that the future looks fairly bleak. The rising popularity of Electron with developers, as well as the move to "the cloud" by large organizations, would seem to mean that there is little incentive for companies like Microsoft to keep producing good desktop UI development support. Somewhat of a self-fulfilling prophecy I guess. Developers use stuff like Electron because it makes it "easier" to develop desktop applications, so companies are less incentivized to make better, easier to use, more performant/efficient software tools that those developers might otherwise want to use. Sometimes it feels like Apple is the only company still producing somewhat decent native application development tools, and trying to actively improve on things in that space. I've been mostly removed from the Windows-world (by choice) for a long while now, so perhaps my perception is a little bit off here.

Anyway, I unfortunately don't have any insightful conclusion or anything to put in this post. Just ranting in a fairly unorganized manner! And I'm sure that my rant has a great many logical holes in it. For all the hardware improvements we see year after year, it feels like the software side of things lags behind in many ways. It disappoints me when I think about it and wonder how much better things could be if developers thought just a little bit more about performance and efficiency.